The major challenge with automated integration testing of a web player is that browser automation tools are built mainly for web applications such as e-commerce sites, single-page applications, and social networks. How do you take a product like JW Player, which is embedded on over 2 million websites where publishers are always coming up with unique ways to use the player, and build an automated testing framework that ensures its quality? I would like to walk through how we’ve taken on that challenge.

Test Harness

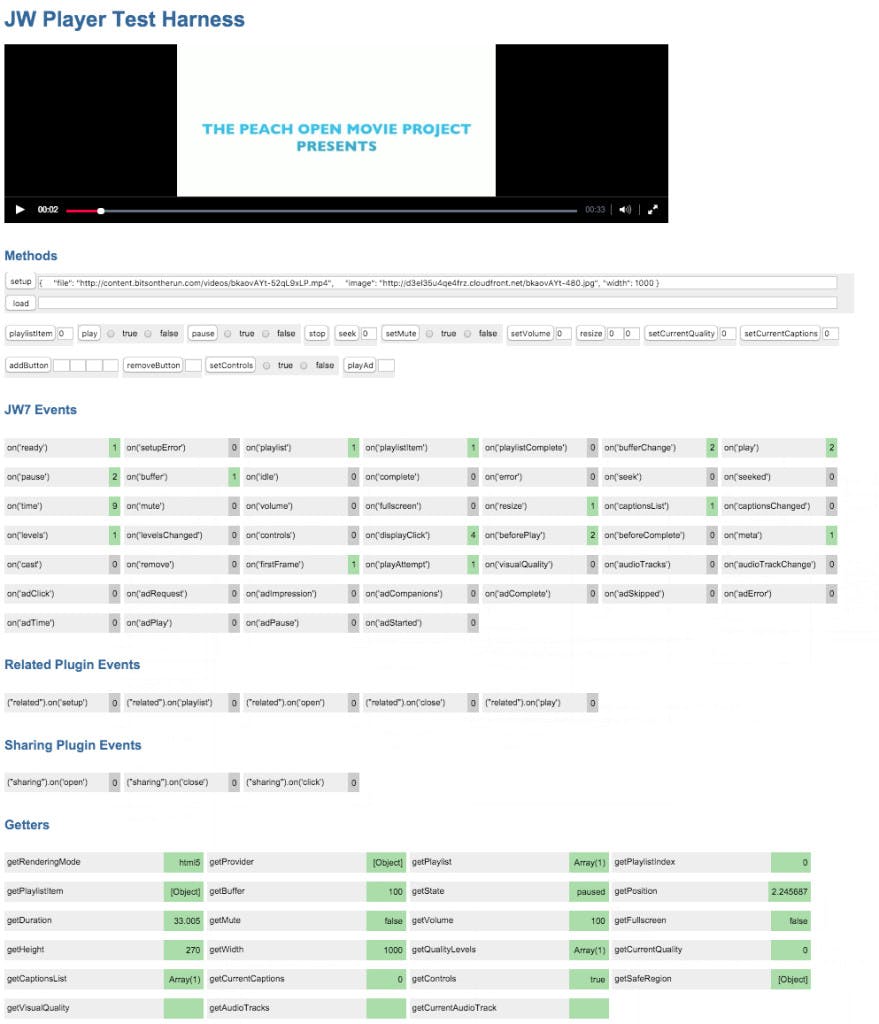

We’ve created a Test Harness that lets us set up a player using any valid JSON configuration and exposes the Player API in the DOM on an HTML form, providing a visual representation of the API. The entire player event history (the count of each event triggered and the event properties) is populated as actions are performed on the player. We use the browser automation library Capybara (a Ruby gem) to interact with the test harness form as well as the player. As we interact with the player, Capybara validates the DOM elements of the harness form by checking the text values of the divs that contain the event counts and property history.

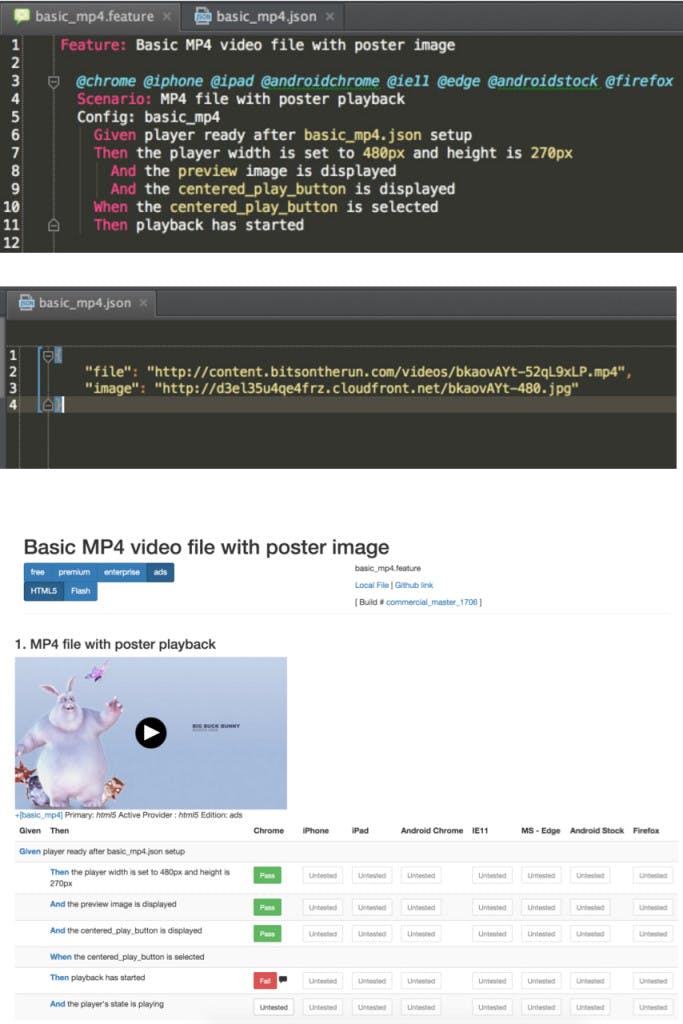

Capybara’s API was designed to handle the challenges of working with asynchronous JavaScript, which made it a better choice for us than other Selenium API wrappers.

What’s interesting and challenging about our automated test workflow is deciding what to assert at each step of a test scenario. In most web applications, a user follows a series of actions to land on a certain page and complete a task. A video player has no such completion path, so we have to decide internally which assertions make a step pass or fail. For example, how can we determine whether the player has actually started playback after we click the play button? Our approach is to consider the JW Player API events and getters that can tell us playback actually started. We also test against the JW Player UI, which in JW7 was designed so that we can validate the presence or absence of CSS flags.

We use Cucumber to create and run scenarios. Here is an example of a very basic Cucumber test scenario we automate:

Scenario: MP4 Playback

Given I set up the player on the harness page with mp4-playlist.json

When I click on the centered play button

Then playback begins

So what is actually happening under the hood when these steps are being executed?

The “Given” step is where we load the test harness page in the browser. We then read the JSON file that contains a player configuration and set up the player with that configuration. The JSON file consists of a playlist and may also include options such as an ad schedule or plugin. Before moving to the next step, we validate that the player is properly set up by checking that the JW Player API on(‘ready’) event was triggered once in the harness.
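To make the “Given” step more concrete, here is a minimal Ruby sketch of the two checks it describes: parsing a configuration and confirming a single ready event. The helper names, the assumption that the playlist is a JSON array, and the shape of the event-count hash are all illustrative and are not taken from our actual step definitions.

```ruby
require "json"

# Hypothetical helper: parse a player configuration and check that it
# contains a playlist. Assumes the playlist is given as a JSON array.
def load_player_config(json_text)
  config = JSON.parse(json_text)
  playlist = config["playlist"]
  unless playlist.is_a?(Array) && !playlist.empty?
    raise ArgumentError, "player config needs a non-empty playlist"
  end
  config
end

# Hypothetical check: setup counts as complete only when the harness
# recorded exactly one 'ready' event.
def player_ready?(event_counts)
  event_counts.fetch("ready", 0) == 1
end

# Example config; the file URL is a placeholder.
SAMPLE_CONFIG = <<~JSON
  {
    "playlist": [
      { "file": "https://example.com/video.mp4" }
    ]
  }
JSON

config = load_player_config(SAMPLE_CONFIG)
puts config["playlist"].length
puts player_ready?({ "ready" => 1 })
```

In a real run the event counts would be read from the harness form in the browser rather than passed in as a hash.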

The “When” step is where we perform a click action on the CSS selector associated with the play button displayed on the player. We use the SitePrism page object library to encapsulate all the test methods and CSS selectors available to us for the player. We can perform actions such as clicks, hovers, keyboard commands, and visibility validations, as well as more advanced interactions such as dragging the control bar scrubber from left to right to perform seek actions.
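The page object idea can be sketched in plain Ruby without the library. This is not our real page object: the class name and selectors are made up, and a stand-in session replaces the browser so the example runs on its own. The only assumption about the session is that it offers find(css) returning an element with click and hover, as a Capybara session does.

```ruby
# A plain-Ruby page object sketch. The selectors below are hypothetical,
# not JW Player's real class names.
class PlayerPage
  SELECTORS = {
    play_button: ".player .play-button",
    control_bar: ".player .control-bar"
  }.freeze

  # session is anything that responds to find(css) and returns an element
  # that responds to click and hover.
  def initialize(session)
    @session = session
  end

  def click_play
    element(:play_button).click
  end

  def hover_control_bar
    element(:control_bar).hover
  end

  private

  def element(name)
    @session.find(SELECTORS.fetch(name))
  end
end

# Stand-in element that records actions instead of driving a browser.
FakeElement = Struct.new(:css, :actions) do
  def click
    actions << [:click, css]
  end

  def hover
    actions << [:hover, css]
  end
end

# Stand-in session so the sketch runs without a browser.
class FakeSession
  attr_reader :actions

  def initialize
    @actions = []
  end

  def find(css)
    FakeElement.new(css, @actions)
  end
end

session = FakeSession.new
PlayerPage.new(session).click_play
p session.actions
```

Keeping selectors in one place means a UI change touches the page object only, not every step definition that clicks the play button.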

The “Then” step is where we have to make more difficult decisions about what counts as a successful playback attempt. This is where we really take advantage of the harness event history. We decide which components of our API should be used to determine that playback began. For this particular example we verify that the on(‘buffer’), on(‘beforePlay’), on(‘play’), and on(‘time’) events are all triggered and populated in the DOM. For some of these events we verify the exact count of firings, which helps us find potential bugs with duplicated event calls. We then verify that our API getter for the player state returns “playing.” We can also make a UI validation on the player to verify that the time slider text in the control bar is updating. If you take a look at the screenshot above of the test harness, you can observe the different events being triggered and the getters for that particular player test.
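The “Then” logic can be sketched as a pure function over the event counts and the player state, plus a small polling helper for the asynchronous part. Which events get an exact count is an assumption here (the example picks beforePlay and play), and wait_until is a hypothetical stand-in for the retrying that Capybara does for us, not its actual implementation.

```ruby
# Hypothetical expectations for "playback begins". Events that should fire
# exactly once carry a count; nil means "at least once" (for example,
# 'time' fires repeatedly during playback).
EXPECTED_EVENTS = {
  "beforePlay" => 1,
  "play" => 1,
  "buffer" => nil,
  "time" => nil
}.freeze

# Returns a list of human-readable problems; empty means playback started.
def playback_problems(event_counts, state)
  problems = []
  EXPECTED_EVENTS.each do |name, exact|
    count = event_counts.fetch(name, 0)
    if exact.nil?
      problems << "#{name} never fired" if count.zero?
    elsif count != exact
      problems << "#{name} fired #{count} times, expected #{exact}"
    end
  end
  problems << "state is #{state}, expected playing" unless state == "playing"
  problems
end

# Polls a block until it returns a truthy value or the timeout passes.
def wait_until(timeout: 2.0, interval: 0.05)
  deadline = Process.clock_gettime(Process::CLOCK_MONOTONIC) + timeout
  loop do
    result = yield
    return result if result
    return false if Process.clock_gettime(Process::CLOCK_MONOTONIC) >= deadline
    sleep interval
  end
end

# Simulate 'time' events only showing up after a few polls.
polls = 0
started = wait_until do
  polls += 1
  counts = { "buffer" => 1, "beforePlay" => 1, "play" => 1, "time" => polls > 2 ? 5 : 0 }
  playback_problems(counts, "playing").empty?
end
puts started
```

Returning a list of problems rather than a boolean makes a failed step easier to diagnose, since a duplicated play event reads differently from a player stuck in the wrong state.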

Test Management – “Squash”

Some time back, when we decided to use Cucumber, we also decided that we would write our manual test cases with Cucumber. We liked the way the Gherkin language made us follow a behavioral flow, and it gave us a standard to follow when writing new test cases. This is really helpful when you have an entire team contributing to test case writing. Even with this approach we still had test management pains. The biggest pain, which many engineers can relate to, is using spreadsheets to manage regression test plans.

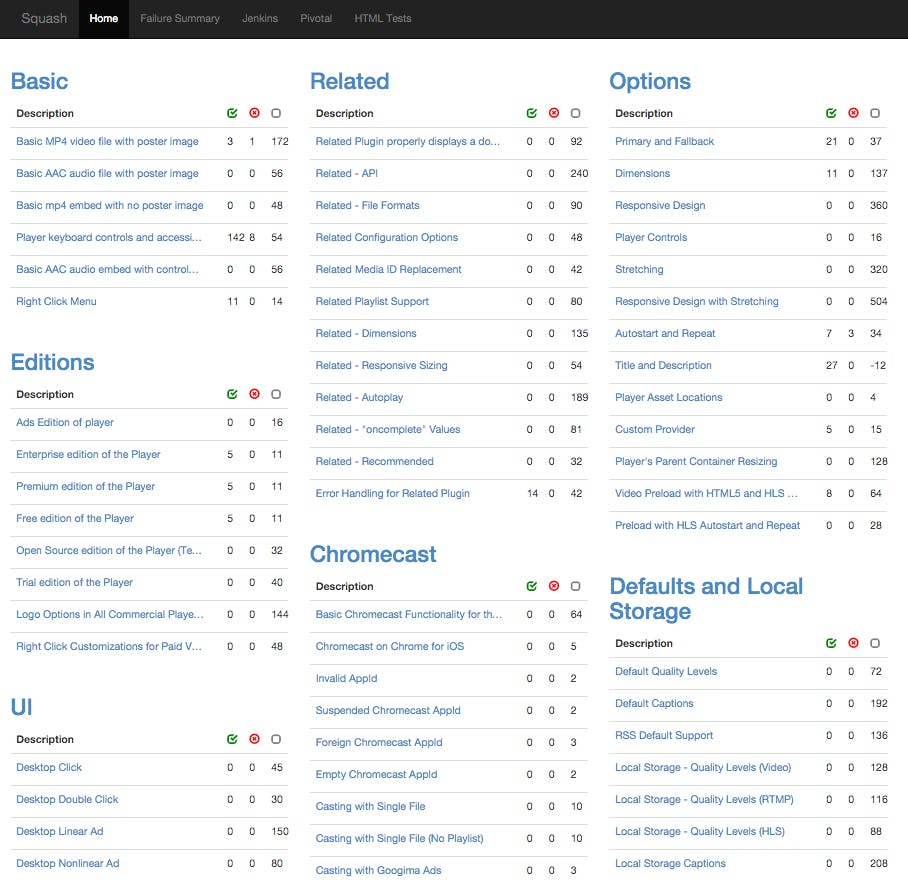

Our player team software engineer, Donato Borrello, created a test management solution for our team named “Squash” that moved us away from relying on spreadsheets. Squash is a single-page app that manages all of our manual Cucumber tests. Squash parses Cucumber feature files and generates an HTML table for each scenario, where test runs can be performed against the scenario. Squash also embeds a player configuration specific to the test case above the table. If you take a look at the screenshots below you can see how it all comes together. First you create a test scenario in a Cucumber feature file. Above the scenario you provide the browser tags for the browsers supported for this particular scenario. We then add a line below the scenario title which does a lookup for a JSON config with the file name provided after “Config.” Once that is all done we are all set to go and test!
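As a rough illustration of the parsing described above, the sketch below pulls the browser tags, scenario title, config file name, and steps out of a feature file. It is not Squash’s actual parser: the “Config:” line syntax and the tag names are assumptions based on the description.

```ruby
# Holds what the sketch extracts for each scenario.
Scenario = Struct.new(:title, :browsers, :config, :steps)

# Line-based parser sketch. Tags above a scenario become its browsers;
# a "Config:" line below the title (assumed syntax) names the JSON config.
def parse_feature(text)
  scenarios = []
  pending_tags = []
  current = nil

  text.each_line do |raw|
    line = raw.strip
    next if line.empty?

    if line.start_with?("@")
      pending_tags = line.split.map { |tag| tag.delete_prefix("@") }
    elsif line.start_with?("Scenario:")
      current = Scenario.new(line.sub("Scenario:", "").strip, pending_tags, nil, [])
      scenarios << current
      pending_tags = []
    elsif current && line.start_with?("Config:")
      current.config = line.sub("Config:", "").strip
    elsif current && line.match?(/\A(Given|When|Then|And|But)\b/)
      current.steps << line
    end
  end

  scenarios
end

FEATURE = <<~GHERKIN
  @chrome @firefox
  Scenario: MP4 Playback
  Config: mp4-playlist.json
  Given I set up the player on the harness page with mp4-playlist.json
  When I click on the centered play button
  Then playback begins
GHERKIN

parse_feature(FEATURE).each do |s|
  puts "#{s.title} [#{s.browsers.join(', ')}] config=#{s.config} steps=#{s.steps.length}"
end
```

Each parsed scenario carries enough information to render one table per scenario, with a row per step and a column per tagged browser.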

Squash has been a huge success in helping us move away from the days of spreadsheet management. We’ve also been able to find synergies between Squash and our automated scenarios. Since we use Cucumber for both our manual and automated tests, we include our automated tests in Squash. When we run our automated tests, we take advantage of Cucumber’s hooks to send a request to the Squash API with the appropriate pass or fail status after each scenario runs. This hybrid approach has helped team members who add manual test cases get a better understanding of commonly used automated step patterns. When they add new manual tests, those tests can already contain multiple automated steps, and I can thank them for making my life easier! It may still seem strange to include automated test reporting in your manual testing infrastructure, but consider that we have over 700 test cases, of which ~200 are currently automated. Multiply that by all supported browsers, and note that some supported browsers, such as Android Stock, currently have no automated test coverage.
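The reporting step might look something like the sketch below, which builds the body of a result report. The payload field names and the BROWSER environment variable are hypothetical, since the Squash API schema is internal; the commented hook shows where such a helper could be called in a Cucumber support file.

```ruby
require "json"

# Builds the JSON body of a result report. Field names are hypothetical.
def squash_result_payload(scenario_name:, passed:, browser:)
  JSON.generate({
    "scenario" => scenario_name,
    "browser" => browser,
    "status" => passed ? "pass" : "fail"
  })
end

# In a Cucumber support file this could be called from an After hook:
#
#   After do |scenario|
#     body = squash_result_payload(
#       scenario_name: scenario.name,
#       passed: scenario.passed?,
#       browser: ENV.fetch("BROWSER", "chrome")
#     )
#     # POST body to the Squash API here (endpoint not shown).
#   end

puts squash_result_payload(scenario_name: "MP4 Playback", passed: true, browser: "chrome")
```

Keeping the payload construction separate from the HTTP call makes it easy to check the report format without a running Squash instance.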

What’s Next?

Visual Regression Testing – One of our next big goals is to add visual regression testing to our framework. There are only so many test cases that we feel confident enough to automate with our existing test infrastructure before we need visual feedback. We have recently evaluated Applitools and have found it to be a great solution with minimal time spent on framework integration. We plan to continue evaluating it and progressively add visual tests where they make the most sense.

Mobile Device Coverage – Our tests are currently geared towards automating against a desktop browser environment, and one of our goals is to step up our game in getting more automated coverage on mobile devices. One of the challenges is that we do not test with emulators, which means our automated tests will need to run on real devices.

And of course, we’re always looking to add more and more automated tests! We are making big strides in our automation efforts and will have more to talk about soon!

Danny Finkelstein, Test Automation Engineer, JW Player