More than half a billion videos are watched on the JW Player video player every day. That produces about 7 billion events a day, which amounts to approximately 1.5 to 2 terabytes of compressed data. We on the data team here at JW Player have built various batch and real-time pipelines to crunch this data and provide analytics to our customers. For more details about our infrastructure, see JW at Scale and Fast String Matching. In this post, I am going to discuss how we got Hive running with Tez in our batch processing pipelines.

All of our pipelines run on AWS, and a significant portion of our daily batch pipeline code is written in Hive. These pipelines run for 1 to 10 hours every day to clean and then aggregate this data, and we have been looking for ways to optimize them.

Tez

We recently came across Tez, which is a framework for YARN-based data processing applications in Hadoop. Tez generalizes the MapReduce paradigm to a more powerful framework based on expressing computations as a complex dataflow graph. One can run any Hive or Pig job using the Tez engine without any modification to the code. We have been reading a lot about how Tez can help boost performance and hence we decided to give it a try and see how it could potentially fit in our pipelines.

Prerequisites for running Tez with Hive:

A Hadoop cluster running YARN

Hive 0.13.1 or later

Tez installed and configured on the Hadoop cluster

Experiments

We used the Boto project’s Python API to launch an EMR cluster with Tez. Amazon has open-sourced a Tez bootstrapping script (though it installs an older version), and we used it to bootstrap Tez on the EMR cluster for our initial testing. Below is a sample script to launch an EMR cluster with Tez:

https://gist.github.com/rohitgarg/9c8071fc7c9eef6de879

After installing and configuring Tez on the EMR cluster, one can run Hive tasks on the Tez execution engine simply by setting the following property before running the query:

SET hive.execution.engine=tez;
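As a rough illustration of how this could fit into a scripted pipeline, here is a minimal Python sketch that fills in a `{DATE}` placeholder (the same style of placeholder used in the test queries in this post) and prepends the engine setting. The function name `render_hive_script`, the sample query, and the date format are hypothetical and not taken from our pipeline code.

```python
def render_hive_script(query_template: str, date: str, engine: str = "tez") -> str:
    """Fill the {DATE} placeholder and prepend the execution-engine setting."""
    # "mr" (MapReduce) and "tez" are the two engines discussed in this post.
    if engine not in ("mr", "tez"):
        raise ValueError(f"unsupported engine: {engine}")
    query = query_template.format(DATE=date)
    return f"SET hive.execution.engine={engine};\n{query}"


# Hypothetical query and date value, for illustration only.
template = "SELECT COUNT(*) FROM table1 WHERE day={DATE};"
script = render_hive_script(template, "20150101")
print(script)
```

The resulting text could then be saved to a file and passed to the Hive CLI, so switching between engines becomes a one-argument change.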

We ran two tests to see whether Tez could handle a dataset as large as ours:

Test 1

Data Size of test task: 930 MB

Cluster Composition: m1.medium master node, 3 r3.8xlarge core nodes, 4 r3.8xlarge task nodes

Memory: 1.7 TB

CPU cores: 224

Test Hive Query: FROM table1 INSERT OVERWRITE TABLE table2 PARTITION (day={DATE}) SELECT column1, column2, COUNT(DISTINCT column4), SUM(column5), SUM(column3), SUM(column6) WHERE day={DATE} AND an_id IS NOT NULL AND column2 IS NOT NULL GROUP BY column1, column2;

The first test was successful and the query ran just fine without changing any default Tez settings.

Next, we wanted to test the Tez query engine against a larger dataset, since many of our Hive tasks run on hundreds of gigabytes of data (sometimes terabytes) every day.

Test 2

Data Size of test task: 2.5 TB

Cluster Composition: m1.xlarge master node, 4 r3.8xlarge core nodes, 2 r3.8xlarge task nodes

Memory: 1.3 TB

CPU cores: 192

Test Hive Query: INSERT OVERWRITE TABLE table2 PARTITION(day) SELECT column1, column2, column3, column4, column5, SUM(column6), SUM(column7), SUM(column8), SUM(column9), SUM(column10), day FROM table1 WHERE day={DATE} GROUP BY column1, column2, column3, column4, column5, day;

This query initially failed with out-of-memory errors. At this point, we started poking around the default Tez settings to figure out the best way to redistribute memory among YARN containers, application masters and tasks.

We modified the following Tez and Hive properties to get rid of the memory errors:

tez.am.resource.memory.mb: amount of memory to be used by the AppMaster

tez.am.java.opts: AppMaster Java process memory size

tez.am.launch.cmd-opts: Command line options that are provided during the launch of the Tez AppMaster process

tez.task.resource.memory.mb: The amount of memory to be used by launched tasks

tez.am.grouping.max-size: Upper size limit (in bytes) of a grouped split, to avoid generating an excessively large split

hive.tez.container.size: Size of the Tez container

hive.tez.java.opts: Tez Java process memory size

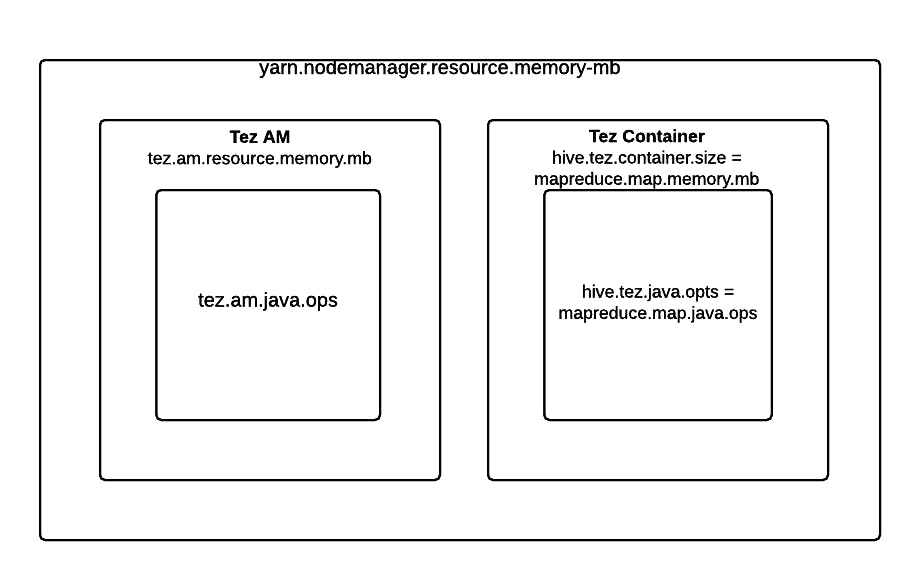

By default, hive.tez.container.size = mapreduce.map.memory.mb and hive.tez.java.opts = mapreduce.map.java.opts

Below is an illustration for various Tez memory settings:

We used the following formulae to guide us in determining YARN and MapReduce memory configurations:

Number of containers = min (2 * cores, 1.8 * disks, (Total available RAM) / min_container_size)

Reserved Memory = Memory for stack memory

Total available RAM = Total RAM per machine - Reserved Memory

Disks = Number of data disks per machine

min_container_size = Minimum container size (in RAM). Its value depends on the amount of RAM available

RAM-per-container = max(min_container_size, (Total Available RAM) / containers)

For example, each node in our cluster had 32 CPU cores, 244 GB of RAM, and 2 disks.

Reserved Memory = 38 GB

min_container_size = 2 GB

Available RAM = (244-38) GB = 206 GB

Number of containers = min(2 * 32, 1.8 * 2, 206 / 2) = min(64, 3.6, 103) = 3.6, rounded to 4

RAM-per-container = max(2, 206 / 4) = max(2, 51.5) = 51.5 GB, or roughly 52 GB

We used the above calculations only as a rough starting point and then tried out different configuration settings.
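For anyone who wants to repeat the arithmetic for a different instance type, here is a minimal Python sketch of the formulae above. The function name `container_plan` and its parameter names are hypothetical, and rounding the container count up is an assumption that happens to match the 3.6 to 4 step in our example.

```python
import math


def container_plan(cores, disks, total_ram_gb, reserved_gb, min_container_gb):
    """Apply the container-count and RAM-per-container formulae for one node."""
    available_gb = total_ram_gb - reserved_gb
    raw_containers = min(2 * cores, 1.8 * disks, available_gb / min_container_gb)
    # Assumption: round up to a whole container, never below one.
    containers = max(1, math.ceil(raw_containers))
    ram_per_container_gb = max(min_container_gb, available_gb / containers)
    return available_gb, containers, ram_per_container_gb


# Values from the example above: 32 cores, 2 disks, 244 GB RAM,
# 38 GB reserved, 2 GB minimum container size.
available, containers, ram_each = container_plan(32, 2, 244, 38, 2)
print(available, containers, ram_each)  # 206 4 51.5
```

With only 2 data disks per node, the disk term is what limits the container count here, which is why each container ends up so large.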

One such setting we tried is:

set tez.task.resource.memory.mb=10000;

set tez.am.resource.memory.mb=59205;

set tez.am.launch.cmd-opts=-Xmx47364m;

set hive.tez.container.size=59205;

set hive.tez.java.opts=-Xmx47364m;

set tez.am.grouping.max-size=36700160000;

The query finally ran after tweaking the above properties.
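In the settings listed above, each -Xmx value is 80% of the corresponding container size (47364 / 59205 = 0.8), which leaves headroom for non-heap memory. The Python sketch below generates the same set statements from a container size so the two values stay in step while experimenting. The helper name `tez_memory_settings` and the `heap_percent` parameter are hypothetical, and the 80% ratio is only what these particular values work out to, not a recommendation we benchmarked.

```python
def tez_memory_settings(container_mb, task_mb, max_split_bytes, heap_percent=80):
    """Build Hive 'set' statements with the JVM heap derived from container size."""
    # Integer arithmetic avoids floating-point rounding surprises.
    heap_mb = container_mb * heap_percent // 100
    return [
        f"set tez.task.resource.memory.mb={task_mb};",
        f"set tez.am.resource.memory.mb={container_mb};",
        f"set tez.am.launch.cmd-opts=-Xmx{heap_mb}m;",
        f"set hive.tez.container.size={container_mb};",
        f"set hive.tez.java.opts=-Xmx{heap_mb}m;",
        f"set tez.am.grouping.max-size={max_split_bytes};",
    ]


# Reproduces the values we tried above.
settings = tez_memory_settings(59205, 10000, 36700160000)
for line in settings:
    print(line)
```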

Conclusion

Hopefully, this post will help you get started with running Tez on EMR. We still have to benchmark its performance against Hive on MapReduce and see whether it makes sense for us to use Tez. If you have any comments or suggestions, you can contact me at rohit@jwplayer.com or on LinkedIn. Last but not least, I would like to thank the Tez core team for bringing such a good product to life.